Discord’s age verification rollout has been met with… shall we say, dismay by many of the platform’s users, some of whom are now hunting for a better, more privacy-focused alternative.

The news was even less well-received when Discord informed some UK users that they may be part of an “experiment” with an age verification provider called Persona, the lead investor of which, in its most recent rounds of capital funding, was a venture fund co-founded and directed by none other than Peter Thiel.

You know, the co-founder of Palantir, a surveillance technology firm that’s been hitting headlines recently for working on apps to help track targets of the US government’s deportation efforts, and over claims that it may compile databases from the private information of US citizens. Naturally.

Discord later said that it had concluded testing with Persona’s platform. Anyway, security and private data concerns around Persona’s data verification efforts have been spreading, and now three security researchers say they’ve discovered a Persona frontend that was exposed to the open internet on a US government-authorised server (via Rage).

Quoting directly from the researchers’ blog, the team says its work was supposed to be a “passive recon investigation,” which quickly turned into “a rabbit hole deep dive into how commercial AI and federal government operations work together to violate our privacy every waking second.”

“We didn’t even have to write or perform a single exploit, the entire architecture was just on the doorstep,” claims the team.

“53 megabytes of unprotected source maps on a FedRAMP government endpoint, exposing the entire codebase of a platform that files Suspicious Activity Reports with FinCEN, compares your selfie to watchlist photos using facial recognition, screens you against 14 categories of adverse media from terrorism to espionage, and tags reports with codenames from active intelligence programs.

“2,456 source files containing the full TypeScript codebase,” the blog continues. “Every permission, every API endpoint, every compliance rule, every screening algorithm. Sitting unauthenticated on the public internet. On a government platform no less.”

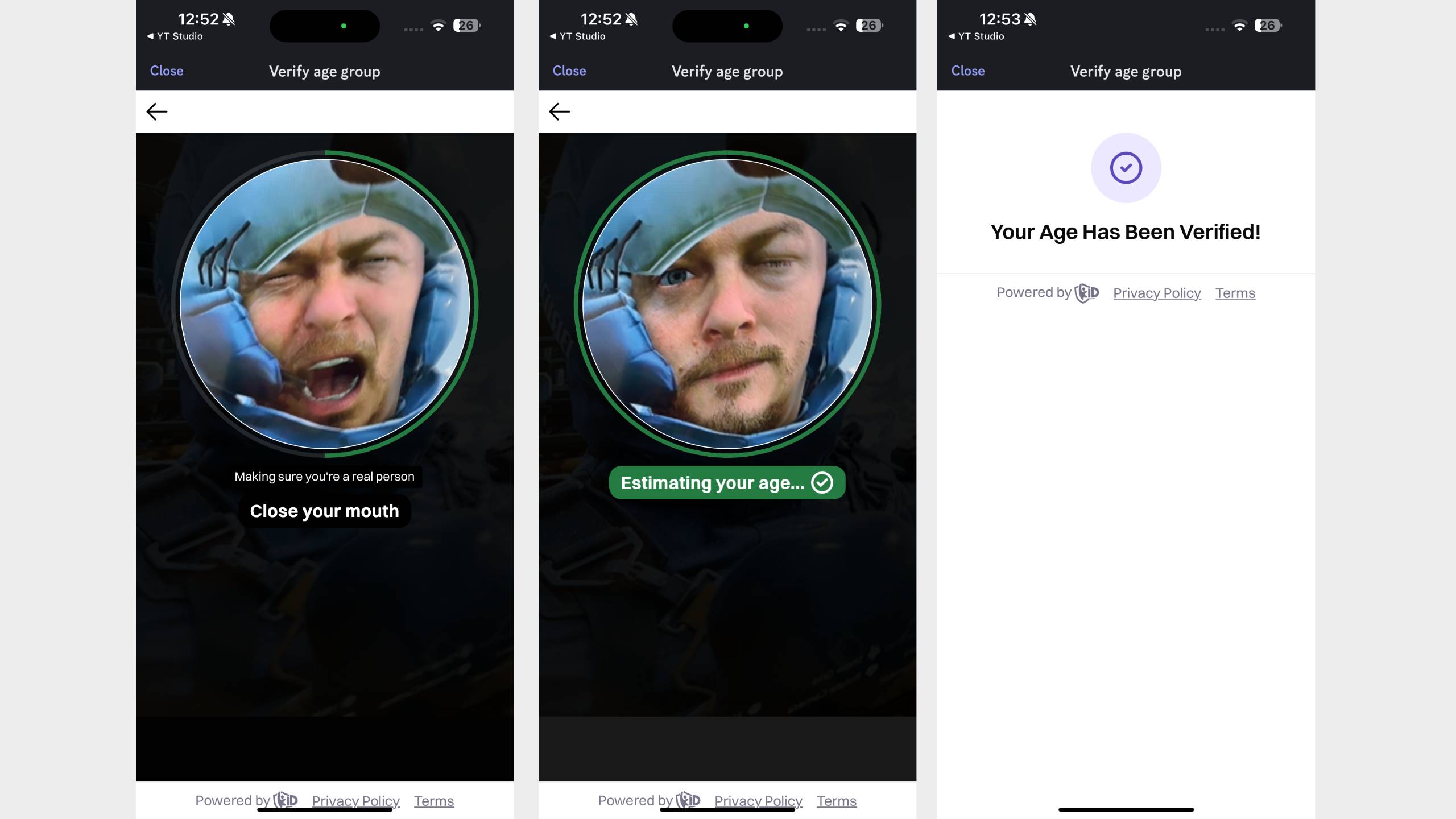

Beyond the astonishing thought that such data could be accessed so easily, it certainly seems like Persona operates more deeply than anyone would reasonably expect. The researchers say that the full verification program performs 269 individual verification checks across 14 check types, including “SelfieSuspiciousEntityDetection”.

“What makes a face ‘suspicious’?” say the researchers. “The code doesn’t say. The users aren’t told.”

What we’re often told, however, is that age verification is in our best interests, as a way to prevent children from viewing harmful content. Still, it doesn’t take a genius to realise that there’s a whole lot more value in facial recognition data than simply verifying that someone’s old enough to view adult material.

How much of this leak applies to Discord’s earlier testing is unclear. However, it’s an excellent example of why privacy advocates have been vocally uncomfortable with the idea of current digital age verification methods, and why you should be very, very picky about who you hand your data over to. If, let’s be honest, anyone at all.